What is Airflow? A Complete Guide

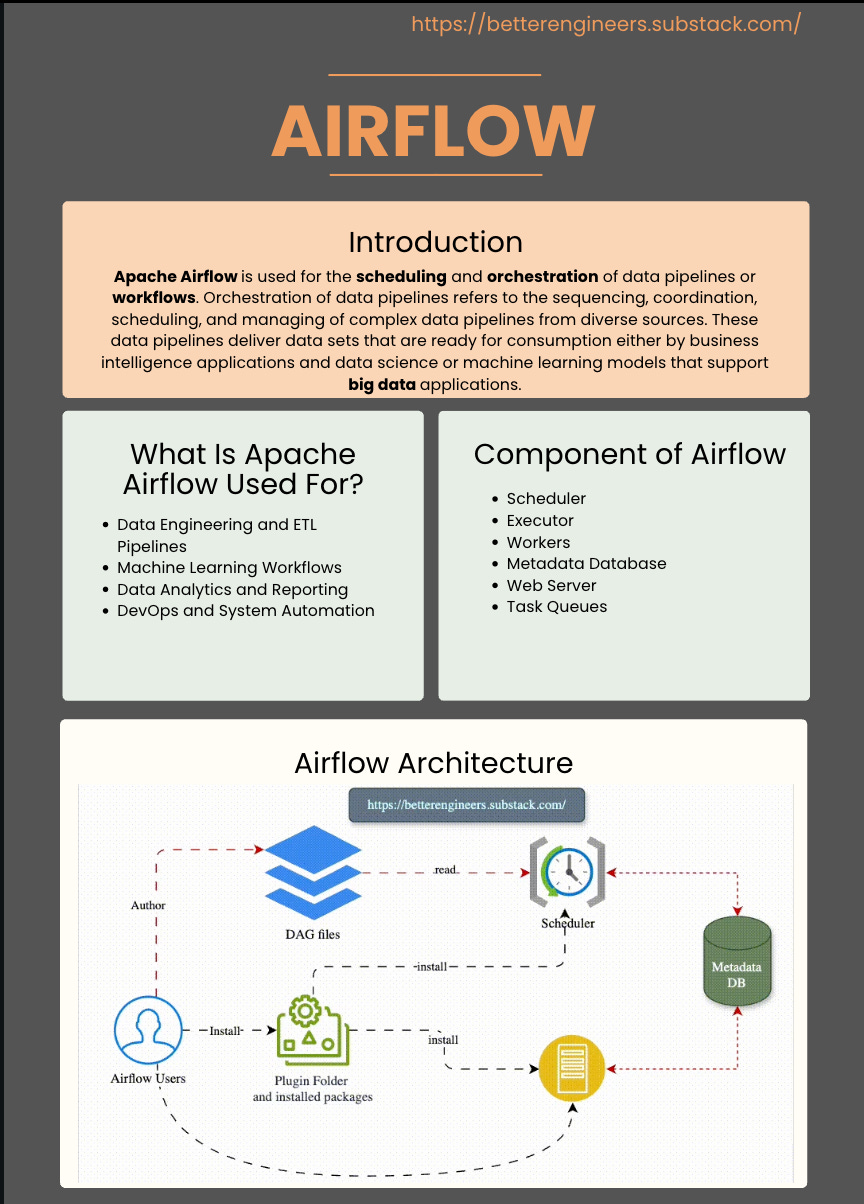

Apache Airflow is used for scheduling and orchestrating data pipelines, also called workflows. Orchestration here means sequencing, coordinating, scheduling, and managing complex data pipelines that draw on diverse sources. These pipelines deliver data sets that are ready for consumption by business intelligence applications, data science work, or machine learning models that support big data applications.

What you will learn today

Airflow

DAG

Components of DAG

How to run a basic Airflow job?

How to Run a DAG with config

What is a Workflow/DAG?

The term DAG stands for Directed Acyclic Graph. Strictly speaking, a DAG is a mathematical concept and nothing particularly innovative in and of itself.

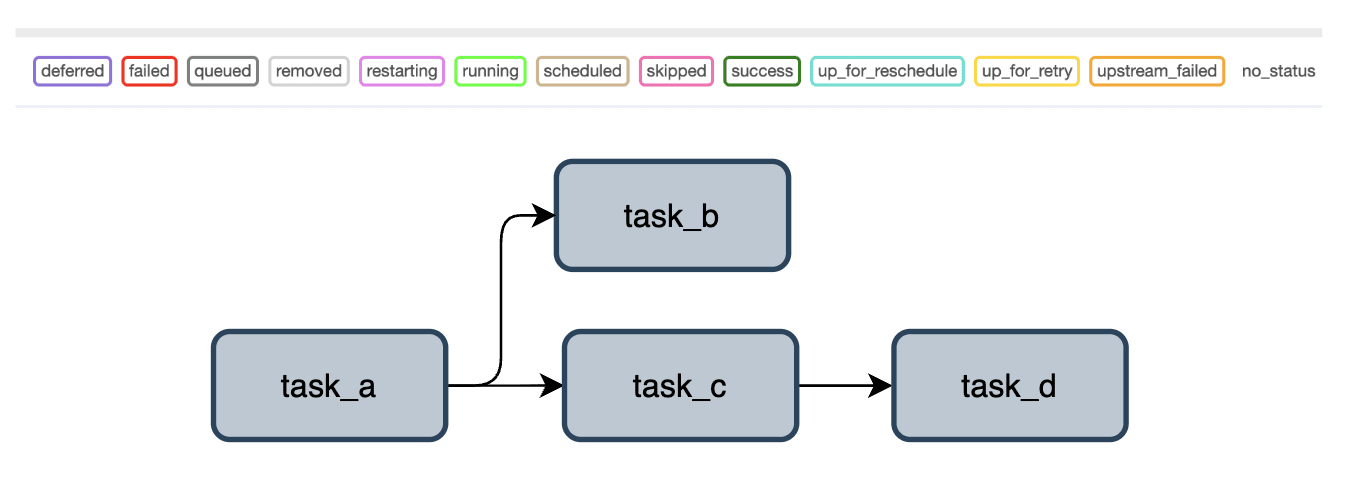

The structure on the left in the image above is a graph made up of nodes, or vertices, and edges connecting the nodes.

In a directed graph, each connection, or edge, has a direction, as indicated by the arrows in the image in the center.

A directed acyclic graph does not allow cyclic relationships between nodes, like the one you can see in the diamond-shaped part of the directed graph in the middle. Formally, a graph G = (V, E) is defined by a set of vertices V and a set of edges E; in a DAG, following the edges from any vertex never leads back to that same vertex.
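To make the definition concrete, here is a minimal sketch in plain Python (not Airflow code) that represents a graph as a set of vertices and a set of directed edges and checks whether it is acyclic. The vertex names and the `is_acyclic` helper are made up for illustration; the check relies on the standard library's `graphlib` module, which needs Python 3.9 or newer.

```python
from graphlib import CycleError, TopologicalSorter


def is_acyclic(vertices, edges):
    """Return True if the directed graph G = (V, E) contains no cycle."""
    # Map each vertex to the set of vertices that point to it (its predecessors).
    predecessors = {vertex: set() for vertex in vertices}
    for source, target in edges:
        predecessors[target].add(source)
    try:
        # static_order() raises CycleError when no topological order exists.
        list(TopologicalSorter(predecessors).static_order())
        return True
    except CycleError:
        return False


# Hypothetical example graphs.
vertices = {"a", "b", "c", "d"}
dag_edges = {("a", "b"), ("a", "c"), ("b", "d"), ("c", "d")}  # no cycle
cyclic_edges = dag_edges | {("d", "a")}  # the edge d -> a closes a loop

print(is_acyclic(vertices, dag_edges))     # True
print(is_acyclic(vertices, cyclic_edges))  # False
```

A workflow engine needs this property because a cycle would mean a task indirectly waits on itself and could never start.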

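An Airflow DAG:

```python
from airflow.models import DAG
from airflow.utils.dates import days_ago

with DAG(
    "etl_sales_daily",        # DAG id shown in the Airflow UI
    start_date=days_ago(1),   # earliest date the DAG may run for
    schedule_interval=None,   # no schedule: runs only when triggered
) as dag:
    ...  # tasks are defined inside this block
```

A DAG is not a data pipeline itself; it’s a structure that tells Airflow how to run each task in the workflow. DAGs provide several advantages: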

Dynamic Scheduling: DAGs can be dynamically constructed using Python code.

Task Dependencies: DAGs define complex dependencies between tasks, ensuring they are executed in the correct order.
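In Airflow, dependencies are usually declared between task objects with the `>>` operator. The plain-Python sketch below only illustrates the idea behind it: given "task depends on these tasks" relations, an order can be derived in which every task runs after its upstream tasks. The task names and the `respects_dependencies` helper are hypothetical, and `graphlib` stands in for Airflow's own scheduling logic.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks; each task maps to the set of tasks it depends on.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform_sales": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform_sales"},
}


def respects_dependencies(order, deps):
    """Check that every task appears after all of its upstream tasks."""
    position = {task: index for index, task in enumerate(order)}
    return all(
        position[upstream] < position[task]
        for task, upstreams in deps.items()
        for upstream in upstreams
    )


execution_order = list(TopologicalSorter(dependencies).static_order())
print(execution_order)
print(respects_dependencies(execution_order, dependencies))  # True
```

The two extract tasks do not depend on each other, so either may come first; only the relative order imposed by the dependencies is guaranteed.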

The Airflow web UI renders this DAG as a graph of its tasks and the dependencies between them.

What Is Apache Airflow Used For?

Here are some of the main uses of Apache Airflow.

Data Engineering and ETL Pipelines

Airflow is useful for the creation and management of ETL (Extract, Transform, Load) pipelines. It allows data engineers to automate the extraction of data from various sources, transform it using custom logic, and load it into data warehouses or databases. Its ability to handle complex dependencies and conditional execution makes it suitable for ETL processes, ensuring data is processed in the correct sequence and any errors can be easily identified and resolved.

Machine Learning Workflows

Machine learning workflows often involve multiple stages, from data preprocessing to model training and evaluation. Apache Airflow can orchestrate these stages, ensuring each step is completed before the next begins. This is particularly useful for automating repetitive tasks such as data cleaning, feature extraction, and model deployment.

Data Analytics and Reporting

Organizations rely on timely and accurate data analytics for decision-making. Apache Airflow can automate the execution of data analysis scripts and the generation of reports. By scheduling tasks to run at specified intervals, Airflow ensures that reports are generated with the most recent data. This helps in maintaining up-to-date dashboards and data visualizations.

DevOps and System Automation

Airflow is also used in DevOps for automating and managing system tasks. This includes database backups, log file analysis, and system health checks. By defining these tasks as workflows, organizations can ensure that maintenance activities are performed regularly and can easily monitor their status. It reduces the risk of human error and ensures consistency.

Airflow Architecture and Key Components

Scheduler

The Scheduler is the brain of Airflow. Its role is to continuously monitor DAGs and schedule tasks based on the defined execution time and dependencies. The Scheduler determines what tasks should run, when they should run, and submits them to the Executor for execution.

In distributed environments, the Scheduler can be scaled to handle large workflows and increase throughput.
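The loop below is a heavily simplified sketch of that decision process, not Airflow's actual scheduler. It assumes a made-up set of task states (`"none"`, `"running"`, `"success"`) and a hypothetical `execute` callback that plays the role of the Executor; a task becomes runnable once all of its upstream tasks have succeeded.

```python
def runnable_tasks(dependencies, states):
    """Return tasks that have not started and whose upstream tasks all succeeded."""
    ready = []
    for task, upstream in dependencies.items():
        if states[task] != "none":
            continue  # already running or finished
        if all(states[up] == "success" for up in upstream):
            ready.append(task)
    return sorted(ready)  # sorted only to make the sketch deterministic


def run_scheduler_loop(dependencies, execute):
    """Repeatedly pick runnable tasks and hand them to the executor callback."""
    states = {task: "none" for task in dependencies}
    run_log = []
    while True:
        ready = runnable_tasks(dependencies, states)
        if not ready:
            break  # nothing left to schedule
        for task in ready:
            states[task] = "running"
            execute(task)  # hand-off to the "executor"
            states[task] = "success"
            run_log.append(task)
    return run_log


# Hypothetical workflow: two checks run after extract, load waits for both.
dependencies = {
    "extract": [],
    "validate": ["extract"],
    "transform": ["extract"],
    "load": ["validate", "transform"],
}

executed = []
print(run_scheduler_loop(dependencies, executed.append))
# ['extract', 'transform', 'validate', 'load']
```

A real scheduler also deals with schedules, retries, failures, and concurrency limits, all of which are left out here.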

Executor

The Executor is responsible for executing the tasks that the Scheduler schedules. There are several types of Executors available, depending on your use case and scale:

SequentialExecutor: The simplest Executor, it executes one task at a time. While not suitable for large-scale workflows, it’s often used for testing purposes or very small workflows where concurrent execution isn’t necessary.

LocalExecutor: Executes tasks in parallel as separate processes on the same machine where the Airflow instance runs. Ideal for small workloads.

CeleryExecutor: Allows distributing tasks across multiple worker machines, making it suitable for large-scale workflows.

KubernetesExecutor: Executes tasks in Kubernetes pods, providing excellent scalability and isolation.

Executors manage the execution of tasks and ensure their successful completion.
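The difference between running one task at a time and running several at once can be sketched with the standard library alone. This is only an analogy: the function names are made up, `run_task` is a stand-in for real work, and threads are used for brevity, whereas Airflow's LocalExecutor launches separate processes.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_task(name):
    """Stand-in for a task: wait briefly, then report success."""
    time.sleep(0.05)
    return f"{name}: success"


def run_sequentially(task_names):
    # One task at a time, similar in spirit to SequentialExecutor.
    return [run_task(name) for name in task_names]


def run_in_parallel(task_names, max_workers=4):
    # Several tasks at once on one machine, similar in spirit to LocalExecutor.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in the same order as the inputs.
        return list(pool.map(run_task, task_names))


task_names = ["clean_data", "build_features", "score_model"]  # hypothetical
print(run_sequentially(task_names))
print(run_in_parallel(task_names))
```

Both calls produce the same results; the parallel version simply finishes sooner when the tasks are independent of each other.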

Workers

Workers are the machines (or pods in Kubernetes) where the actual tasks are executed. They perform the processing by pulling tasks from the task queue and running them. For environments using Celery or Kubernetes Executors, workers can be scaled dynamically to handle fluctuating workloads.

Metadata Database

The Metadata Database is the heart of Airflow’s state management. It stores metadata about DAGs, tasks, task instances, execution history, and other configuration. Task logs themselves are usually written to files or remote storage rather than to this database. Airflow typically uses a relational database like PostgreSQL or MySQL for metadata storage.

This database helps Airflow to keep track of which tasks are running, failed, or completed, allowing it to retry failed tasks and manage complex workflows.
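As a rough illustration of how stored state supports retries, the sketch below uses an in-memory SQLite database. The table is a deliberately simplified, hypothetical stand-in for Airflow's real schema, and `tasks_to_retry` is an invented helper, not an Airflow API.

```python
import sqlite3

# In-memory database with a simplified, hypothetical task-instance table.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE task_instance (
        dag_id TEXT,
        task_id TEXT,
        state TEXT,
        try_number INTEGER,
        max_tries INTEGER
    )
    """
)
conn.executemany(
    "INSERT INTO task_instance VALUES (?, ?, ?, ?, ?)",
    [
        ("etl_sales_daily", "extract_orders", "success", 1, 3),
        ("etl_sales_daily", "extract_customers", "failed", 1, 3),
        ("etl_sales_daily", "extract_returns", "failed", 3, 3),
    ],
)


def tasks_to_retry(connection):
    """Return failed tasks that still have attempts left."""
    rows = connection.execute(
        "SELECT task_id FROM task_instance "
        "WHERE state = 'failed' AND try_number < max_tries "
        "ORDER BY task_id"
    ).fetchall()
    return [task_id for (task_id,) in rows]


print(tasks_to_retry(conn))  # ['extract_customers']
```

Because the state lives in a database rather than in the memory of a single process, any component can restart and still see which tasks succeeded, failed, or are due for another attempt.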

Web Server

The Web Server component provides a user interface to interact with Airflow. In Airflow 2.x and earlier it is built using Flask, and it allows users to view DAGs, monitor task progress, check logs, and manage workflows. The UI makes it possible to trigger, pause, and inspect workflows without writing additional code.

Some key features of the Web UI include:

DAG Visualization: Provides a graphical representation of DAGs and tasks.

Task Monitoring: Shows real-time task execution status (running, queued, failed).

Logs Access: Enables easy access to task logs for debugging.

Task Queues

Task Queues are used to decouple task scheduling from task execution. When a task is scheduled, it’s placed in a queue, where it waits until a worker picks it up for execution. In the CeleryExecutor, for example, a message broker (e.g., RabbitMQ or Redis) is used to manage task queues.
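The decoupling can be sketched with Python's built-in `queue` and `threading` modules. This is an in-process analogy under stated assumptions, not how Celery works internally: the `worker` and `run_with_queue` functions are made up, and a real deployment would use an external broker such as RabbitMQ or Redis instead of `queue.Queue`.

```python
import queue
import threading


def worker(task_queue, results, lock):
    """Pull tasks from the queue until a None sentinel arrives."""
    while True:
        task = task_queue.get()
        if task is None:  # sentinel: no more work for this worker
            task_queue.task_done()
            break
        with lock:
            results.append(f"{task} done")  # stand-in for real work
        task_queue.task_done()


def run_with_queue(tasks, num_workers=2):
    task_queue = queue.Queue()
    results = []
    lock = threading.Lock()
    threads = [
        threading.Thread(target=worker, args=(task_queue, results, lock))
        for _ in range(num_workers)
    ]
    for thread in threads:
        thread.start()
    for task in tasks:  # the "scheduler" side only enqueues work
        task_queue.put(task)
    for _ in threads:  # one sentinel per worker
        task_queue.put(None)
    task_queue.join()  # wait until every queued item has been processed
    for thread in threads:
        thread.join()
    return results


finished = run_with_queue(["extract", "transform", "load"])
print(sorted(finished))  # ['extract done', 'load done', 'transform done']
```

The producer never calls a worker directly, so workers can be added or removed without changing the code that enqueues tasks.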

How to Write a DAG in Airflow
