YouTube System Design

Functional Requirements

Viewers’ Requirements:

Immediate video playback upon request

Consistent viewing experience regardless of traffic spikes

Compatibility across different devices and network speeds

Creators’ Requirements:

Straightforward video upload URL procurement

Efficient file transfer to the server for various video sizes

Confirmation of successful upload and encoding

Non-Functional Requirements

100M Daily Active Users

Read:write ratio = 100:1

Each user watches 5 videos a day on average

Each creator uploads 1 video a day on average

Each video is assumed to be 500 MB in size

Data retention for 10 years

Low latency

Scalability

High availability

Capacity Planning

We will calculate QPS and storage using our Capacity Calculator.

QPS

QPS = Total number of query requests per day / Number of seconds in a day

Given that the DAU (Daily Active Users) is 100M, with each user performing 1 write operation per day (a simplifying upper bound that treats every user as a creator who uploads once a day), and a Read:Write ratio of 100:1, the daily number of read requests is: 100M * 100 = 10B. Therefore, Read QPS = 10B / (24 * 3600) ≈ 115,740.

Storage

Storage = Total data volume per day * Number of days retained

Given that the size of each video is 500 MB, and the daily number of write requests is 100M, the daily data volume is: 100M * 500 MB = 50 PB. To retain data for 10 years, the required storage space is: 100M * 500 MB * 365 * 10 ≈ 183 EB.
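The arithmetic above can be reproduced with a few lines of Python. This is a minimal sketch, not the Capacity Calculator mentioned earlier: the function name `estimate_capacity` and its parameters are illustrative, and it assumes decimal units (1 MB = 10^6 bytes, 1 EB = 10^18 bytes) and 365-day years.

```python
SECONDS_PER_DAY = 24 * 3600


def estimate_capacity(dau, writes_per_user_per_day, read_write_ratio,
                      video_size_bytes, retention_years):
    """Return (write_qps, read_qps, storage_bytes) for the stated assumptions."""
    daily_writes = dau * writes_per_user_per_day
    daily_reads = daily_writes * read_write_ratio
    write_qps = daily_writes / SECONDS_PER_DAY
    read_qps = daily_reads / SECONDS_PER_DAY
    # Every write is assumed to store one video of the given size.
    storage_bytes = daily_writes * video_size_bytes * 365 * retention_years
    return write_qps, read_qps, storage_bytes


write_qps, read_qps, storage_bytes = estimate_capacity(
    dau=100_000_000,
    writes_per_user_per_day=1,
    read_write_ratio=100,
    video_size_bytes=500 * 10**6,  # 500 MB in decimal units
    retention_years=10,
)
print(f"write QPS: about {write_qps:,.0f}")
print(f"read QPS: about {read_qps:,.0f}")
print(f"storage: about {storage_bytes / 10**18:.1f} EB")
```

Changing any single input (for example, a smaller average video size) shows quickly how sensitive the storage figure is to that assumption.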

Bottleneck

Identifying bottlenecks requires analyzing peak load times and user distribution. The largest bottleneck would likely be in video delivery, which can be mitigated using CDNs and efficient load balancing strategies across the network.

When planning for CDN costs, the focus is on the delivery of content. Given the 100:1 read:write ratio, most of the CDN cost will be due to video streaming. With aggressive caching and geographic distribution, however, these costs can be optimized.

High-level Design

Building blocks and design

Based on the requirements, we will reuse several elementary design components as building blocks. Let’s identify the building blocks that will be an integral part of our design for the YouTube system. The key building blocks are given below:

Databases: Databases are required to store the metadata of videos, thumbnails, comments, and user-related information.

Blob storage: Blob storage is important for storing all videos on the platform.

CDN: A CDN is used to effectively deliver content to end users, reducing delay and burden on end servers.

Load balancers: Load balancers are a necessity to distribute millions of incoming client requests among the pool of available servers.

Encoders and transcoders: Encoders and transcoders compress videos and transform them into different formats and qualities to support a variety of devices according to their screen resolution and bandwidth.
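To make the idea of "different formats and qualities" concrete, the sketch below picks one quality level for a client based on its screen height and measured bandwidth. The rendition ladder, the bitrates, and the `safety_factor` are hypothetical values chosen for illustration; they are not taken from YouTube or from this design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    height: int        # vertical resolution in pixels
    bitrate_kbps: int  # approximate bitrate needed for smooth playback


# Hypothetical ladder, ordered from highest to lowest quality.
LADDER = [
    Rendition("1080p", 1080, 5000),
    Rendition("720p", 720, 2800),
    Rendition("480p", 480, 1400),
    Rendition("360p", 360, 800),
    Rendition("240p", 240, 400),
]


def pick_rendition(bandwidth_kbps, screen_height, safety_factor=0.8):
    """Pick the best rendition that fits the screen and the usable bandwidth."""
    usable_kbps = bandwidth_kbps * safety_factor  # keep headroom for jitter
    for rendition in LADDER:
        if rendition.height <= screen_height and rendition.bitrate_kbps <= usable_kbps:
            return rendition
    return LADDER[-1]  # nothing fits: fall back to the lowest quality


print(pick_rendition(bandwidth_kbps=3000, screen_height=1080).name)
```

The transcoder's job in this picture is to produce every entry of the ladder ahead of time, so that the choice at playback time is only a lookup.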

YouTube search

Since YouTube is one of the most visited websites, a large number of users will be using the search feature. Even though we have covered a building block on distributed search, we’ll provide a basic overview of how search inside the YouTube system will work.

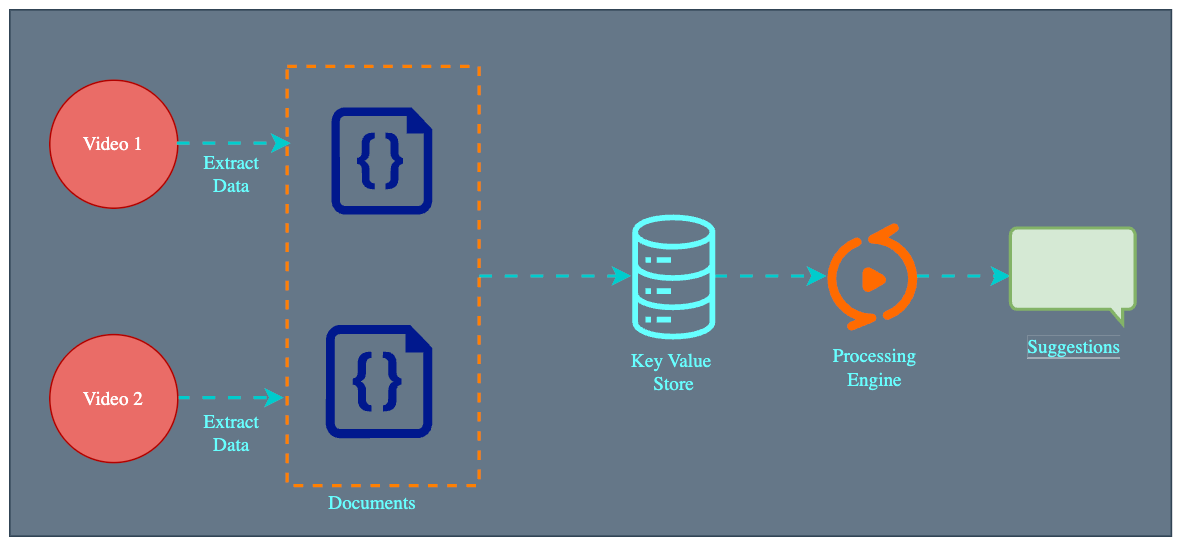

Each new video uploaded to YouTube will be processed for data extraction. We can use a JSON file to store extracted data, which includes the following:

Title of the video.

Channel name.

Description of the video.

The content of the video, possibly extracted from the transcripts.

Video length.

Categories.

Each of the JSON files can be referred to as a document. Next, keywords will be extracted from the documents and stored in a key-value store. The key in the key-value store will hold all the keywords searched by the users, while the value will contain the occurrences of each keyword, its frequency, and the locations of the occurrences in the different documents.
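The keyword store described here is essentially an inverted index. The following is a minimal in-memory sketch, assuming a plain Python dictionary in place of the key-value store and a naive lowercase tokenizer; the function names, the sample documents, and the frequency-based ranking are all illustrative assumptions.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Split text into lowercase alphanumeric keywords."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(documents):
    """Map keyword -> {doc_id: [positions]} for documents of the form {doc_id: {field: text}}."""
    index = defaultdict(dict)
    for doc_id, doc in documents.items():
        text = " ".join(str(value) for value in doc.values())
        for position, keyword in enumerate(tokenize(text)):
            index[keyword].setdefault(doc_id, []).append(position)
    return index


def search(index, query):
    """Return ids of documents containing every query keyword, most frequent first."""
    terms = tokenize(query)
    if not terms:
        return []
    postings = [index.get(term, {}) for term in terms]
    common = set(postings[0]).intersection(*postings[1:])

    def score(doc_id):
        return sum(len(posting[doc_id]) for posting in postings)

    return sorted(common, key=lambda doc_id: (-score(doc_id), doc_id))


docs = {
    "v1": {"title": "Intro to system design", "channel": "TechTalks",
           "description": "Design basics"},
    "v2": {"title": "Cooking pasta", "channel": "HomeChef",
           "description": "Simple pasta recipe"},
    "v3": {"title": "System design interview", "channel": "TechTalks",
           "description": "Design a video system"},
}
index = build_index(docs)
print(search(index, "system design"))
```

Here the frequency of a keyword in a document is the length of its position list, so one structure carries the occurrence, frequency, and location information the text mentions.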

The high-level design of YouTube adopts a microservices architecture, breaking down the platform into smaller, interconnected services. This modular approach allows for independent scaling, deployment, and better fault isolation.

Fulfilling requirements

Our proposed design needs to fulfill the requirements we defined. Our main requirements are smooth streaming (low latency), scalability, availability, and reliability.

Let’s discuss them one by one.

Low latency/smooth streaming: This can be achieved through these strategies:

Geographically distributed cache servers at the ISP level to keep the most viewed content.

Choosing appropriate storage systems for different types of data. For example, we can use Bigtable for thumbnails, blob storage for videos, and so on.

Using caching at various layers via a distributed cache management system.

Utilizing CDNs that make heavy use of caching and mostly serve videos out of memory. A CDN deploys its services in close vicinity to the end users for low-latency services.
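As an illustration of how one cache layer keeps the most viewed content, the sketch below implements a small least-recently-used (LRU) cache with a cache-aside read path. LRU is an assumed eviction policy picked for the example, and the `origin` dictionary is a stand-in for blob storage; neither is prescribed by this design.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items = OrderedDict()

    def get(self, key):
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # drop the oldest entry


def fetch_segment(cache, origin, key):
    """Cache-aside read: serve from the cache, else load from origin and cache it."""
    data = cache.get(key)
    if data is None:
        data = origin[key]
        cache.put(key, data)
    return data


origin = {"a": b"A", "b": b"B", "c": b"C"}  # stand-in for blob storage
cache = LRUCache(capacity=2)
for key in ["a", "b", "a", "c"]:
    fetch_segment(cache, origin, key)
```

After this access pattern, segment "b" has been evicted while the more recently requested "a" and "c" remain cached.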

Scalability: We’ve taken various steps to ensure scalability in our design. The horizontal scalability of web and application servers will not be a problem as the number of users grows. However, MySQL storage cannot scale beyond a certain point. As we’ll see in the coming sections, that may require some restructuring.

Availability: The system can be made highly available through redundancy, by replicating data across multiple servers to avoid a single point of failure. Replicating data across data centers will ensure high availability, even if an entire data center fails because of power or network issues. Furthermore, local load balancers can exclude any dead servers, and global load balancers can steer traffic to a different region if the need arises.

Reliability: YouTube’s system can be made reliable by using data partitioning and fault-tolerance techniques. Through data partitioning, the unavailability of one type of data will not affect others. We can use redundant hardware and software components for fault tolerance. Furthermore, we can use the heartbeat protocol to monitor the health of servers and exclude servers that are faulty. We can use a variant of consistent hashing to add or remove servers seamlessly and reduce the burden on specific servers in case of nonuniform load.
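The consistent hashing idea can be sketched as a hash ring with virtual nodes. This is one common variant, shown under stated assumptions: the class name, the choice of SHA-256, and the default of 100 virtual nodes per server are illustrative and not part of the original design.

```python
import bisect
import hashlib


class ConsistentHashRing:
    """Hash ring with virtual nodes; each key maps to the next node clockwise."""

    def __init__(self, servers=(), vnodes=100):
        self.vnodes = vnodes
        self._ring = []  # sorted list of (hash, server) tuples
        for server in servers:
            self.add_server(server)

    @staticmethod
    def _hash(key):
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")

    def add_server(self, server):
        # Several virtual nodes per server spread the load more evenly.
        for i in range(self.vnodes):
            bisect.insort(self._ring, (self._hash(f"{server}#{i}"), server))

    def remove_server(self, server):
        self._ring = [(h, s) for h, s in self._ring if s != server]

    def get_server(self, key):
        if not self._ring:
            raise LookupError("no servers in the ring")
        # (hash,) sorts before every (hash, server), so this finds the first
        # virtual node at or after the key's hash, wrapping around at the end.
        idx = bisect.bisect_left(self._ring, (self._hash(key),))
        return self._ring[idx % len(self._ring)][1]


ring = ConsistentHashRing(["server-a", "server-b", "server-c"])
print(ring.get_server("video-42"))
```

When a server is removed, only the keys that were mapped to it move to other servers; all other keys keep their assignment, which is what makes adding and removing servers cheap.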

How can we handle processing a video to support adaptive bitrate streaming?

1. Problem & Requirements

Core functional requirements

Users can upload videos.

Users can watch (stream) videos.

Everything else (search, comments, recommendations, subscriptions, channels, etc.) is explicitly “below the line” and out of scope.

Key non-functional requirements

High availability (availability is prioritized over consistency, in CAP terms).

Handle large videos (tens of GB).

Low-latency streaming, even on bad networks.

Scale to roughly 1M uploads/day and 100M views/day.

Resumable uploads.
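One way to support resumable uploads of very large files is to split the file into fixed-size chunks and track which chunks the server has already accepted. The sketch below is a minimal in-memory illustration; the `UploadSession` class, the per-chunk SHA-256 checksum, and holding chunks in a dictionary rather than blob storage are all assumptions made for the example.

```python
import hashlib


class UploadSession:
    """Tracks which fixed-size chunks of one file have been received."""

    def __init__(self, total_size, chunk_size):
        self.total_size = total_size
        self.chunk_size = chunk_size
        self.num_chunks = -(-total_size // chunk_size)  # ceiling division
        self._chunks = {}  # in-memory stand-in for durable chunk storage

    def put_chunk(self, index, data, sha256_hex):
        if not 0 <= index < self.num_chunks:
            raise IndexError("chunk index out of range")
        if hashlib.sha256(data).hexdigest() != sha256_hex:
            raise ValueError("checksum mismatch, chunk must be re-sent")
        self._chunks[index] = data  # re-sending a chunk is harmless

    def missing_chunks(self):
        """Chunks the client still has to send after a dropped connection."""
        return [i for i in range(self.num_chunks) if i not in self._chunks]

    def assemble(self):
        if self.missing_chunks():
            raise RuntimeError("upload is not complete")
        return b"".join(self._chunks[i] for i in range(self.num_chunks))


def split_chunks(payload, chunk_size):
    return [payload[i:i + chunk_size] for i in range(0, len(payload), chunk_size)]


payload = bytes(range(256)) * 10  # 2,560 bytes standing in for a video file
chunks = split_chunks(payload, 1000)
session = UploadSession(total_size=len(payload), chunk_size=1000)

# Simulate an interrupted upload: chunks 0 and 2 arrive, chunk 1 does not.
for i in (0, 2):
    session.put_chunk(i, chunks[i], hashlib.sha256(chunks[i]).hexdigest())
print(session.missing_chunks())
```

On reconnect, the client asks for the missing chunk list and sends only those chunks, so a failure late in a multi-gigabyte upload does not force a restart from the beginning.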

2. Core entities & APIs

Entities

User – uploader or viewer.

Video – logical video record.

VideoMetadata – title, description, uploader, plus URLs for content/transcript/manifest, etc.
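The entities above could be modeled as simple data classes. The field names below follow the descriptions in the list where possible; the identifiers (`user_id`, `video_id`) and the `status` field are hypothetical additions for illustration only.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class User:
    user_id: str  # hypothetical identifier
    name: str


@dataclass
class VideoMetadata:
    title: str
    description: str
    uploader_id: str
    # URLs are filled in once the corresponding artifacts exist.
    content_url: Optional[str] = None
    transcript_url: Optional[str] = None
    manifest_url: Optional[str] = None


@dataclass
class Video:
    video_id: str  # hypothetical identifier
    metadata: VideoMetadata
    status: str = "uploading"  # hypothetical lifecycle field


uploader = User(user_id="u1", name="Alice")
video = Video(
    video_id="v1",
    metadata=VideoMetadata(
        title="My first video",
        description="A short demo clip",
        uploader_id=uploader.user_id,
    ),
)
print(video.metadata.title, video.status)
```

Keeping the URLs optional reflects that a video record exists before its content, transcript, and manifest have been produced.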

Initial API sketch