Learn Apache Kafka From Basics, Part 1

In this series, I will walk you through Apache Kafka from the basics, explain its benefits, and show how to integrate it into clients and servers.

What is Apache Kafka?

Apache Kafka is a highly popular distributed streaming platform known for its scalability, durability, and fault tolerance.

Here are several reasons why Apache Kafka is commonly used in distributed system designs:

1. Scalability: Kafka is designed for horizontal scalability, allowing it to handle large volumes of data and high throughput rates. It scales out by adding brokers to the cluster and spreading each topic's partitions across them as data ingestion and processing demands grow.

2. Durability and Persistence: Kafka persists messages to disk and, when topics are replicated, keeps copies on several brokers, so acknowledged data survives individual system failures or crashes. This makes Kafka suitable for use cases where data integrity and reliability are paramount.

3. High Throughput and Low Latency: Kafka's architecture is optimized for high throughput and low latency, making it well suited to real-time data streaming and processing applications. A well-tuned cluster can handle millions of messages per second with low processing overhead.

4. Fault Tolerance: Kafka offers built-in fault tolerance mechanisms to keep the system resilient and available. It replicates each partition across multiple brokers in a cluster; if the broker leading a partition fails, one of the in-sync replicas is automatically elected as the new leader, so fully replicated messages are not lost and the partition stays available.

5. Message Retention and Partitioning: Kafka supports configurable retention policies, so messages can be kept for a specified period of time or until a partition reaches a specified size. Each topic is also split into partitions, which enables parallel processing and load distribution across consumers.
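Retention is applied to whole log segments of a partition rather than to individual messages. The toy model below is a simplified sketch: it assumes each segment records the timestamp of its newest message and its size in bytes, and applies a time limit (comparable to the `retention.ms` topic setting) and a per-partition size limit (comparable to `retention.bytes`). The `Segment` class and `apply_retention` function are hypothetical, and the broker's exact deletion rules differ in details.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Segment:
    """Hypothetical stand-in for one log segment of a partition."""
    base_offset: int       # offset of the first message in the segment
    max_timestamp_ms: int  # timestamp of the newest message in the segment
    size_bytes: int        # bytes on disk


def apply_retention(
    segments: List[Segment],
    now_ms: int,
    retention_ms: Optional[int] = None,
    retention_bytes: Optional[int] = None,
) -> List[Segment]:
    """Return the surviving segments, deleting only from the oldest end.

    This sketch always keeps the newest segment, standing in for the
    segment that is currently being written to.
    """
    kept = sorted(segments, key=lambda s: s.base_offset)
    # Time-based retention: drop segments whose newest message is too old.
    if retention_ms is not None:
        while len(kept) > 1 and now_ms - kept[0].max_timestamp_ms > retention_ms:
            kept.pop(0)
    # Size-based retention: drop the oldest segments while the partition is too big.
    if retention_bytes is not None:
        while len(kept) > 1 and sum(s.size_bytes for s in kept) > retention_bytes:
            kept.pop(0)
    return kept


segments = [
    Segment(base_offset=0, max_timestamp_ms=1_000, size_bytes=500),
    Segment(base_offset=100, max_timestamp_ms=5_000, size_bytes=500),
    Segment(base_offset=200, max_timestamp_ms=9_000, size_bytes=500),
]
# Keep 6 seconds of data: only the first segment is old enough to delete.
print([s.base_offset for s in apply_retention(segments, now_ms=10_000, retention_ms=6_000)])
# [100, 200]
```

Deleting whole segments keeps retention cheap: the broker removes old files instead of rewriting logs message by message.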

Apache Kafka is an event streaming platform.

What does that mean?

Kafka combines three key capabilities so you can implement your use cases for event streaming end-to-end with a single battle-tested solution:

To publish (write) and subscribe to (read) streams of events, including continuous import/export of your data from other systems.

To store streams of events durably and reliably for as long as you want.

To process streams of events as they occur or retrospectively.
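To make the three capabilities concrete, here is a toy in-memory model, assuming nothing more than a Python list acting as an append-only log: publishing appends an event, storing means events stay in the log after they are read, and processing can happen as events arrive or later by replaying from an earlier offset. The `EventLog` class is hypothetical and has none of Kafka's durability or networking; real Kafka consumers pull events by polling, and the callback here only keeps the sketch short.

```python
from typing import Callable, List


class EventLog:
    """Hypothetical in-memory append-only log (not a Kafka client)."""

    def __init__(self) -> None:
        self._events: List[str] = []
        self._subscribers: List[Callable[[int, str], None]] = []

    def publish(self, event: str) -> int:
        """Append an event, hand it to live subscribers, return its offset."""
        offset = len(self._events)
        self._events.append(event)
        for callback in self._subscribers:
            callback(offset, event)  # process the event as it occurs
        return offset

    def subscribe(self, callback: Callable[[int, str], None]) -> None:
        self._subscribers.append(callback)

    def replay(self, from_offset: int = 0) -> List[str]:
        """Read stored events again (retrospective processing)."""
        return self._events[from_offset:]


log = EventLog()
live: List[str] = []
log.subscribe(lambda offset, event: live.append(f"{offset}:{event}"))
log.publish("user_signed_up")
log.publish("order_placed")

print(live)           # ['0:user_signed_up', '1:order_placed']
print(log.replay(1))  # ['order_placed']
```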

How does Kafka work in a nutshell?

Kafka is a distributed system consisting of servers and clients that communicate via a high-performance TCP network protocol. It can be deployed on bare-metal hardware, virtual machines, and containers, both on-premises and in cloud environments.
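Kafka's TCP protocol is binary and length-prefixed: every request starts with a 4-byte big-endian size, followed by a request header and the request body. The sketch below follows the request header v1 layout described in the Kafka protocol guide (API key, API version, correlation ID, and a client ID string with a 2-byte length prefix) to frame an ApiVersions (API key 18) version 0 request, whose body is empty. It only builds the bytes and does not open a socket; `frame_api_versions_request` is a hypothetical helper name.

```python
import struct

API_VERSIONS_KEY = 18  # ApiVersions request, per the Kafka protocol guide


def frame_api_versions_request(correlation_id: int, client_id: str) -> bytes:
    """Build a size-prefixed ApiVersions v0 request (header v1, empty body).

    Layout assumed from the Kafka protocol guide's request header v1.
    """
    client_bytes = client_id.encode("utf-8")
    # Header: api_key INT16, api_version INT16, correlation_id INT32,
    # then client_id as an INT16 length followed by UTF-8 bytes.
    header = struct.pack(
        ">hhih", API_VERSIONS_KEY, 0, correlation_id, len(client_bytes)
    ) + client_bytes
    body = b""  # ApiVersions v0 has no request fields
    payload = header + body
    # Every request is preceded by its length as a big-endian INT32.
    return struct.pack(">i", len(payload)) + payload


frame = frame_api_versions_request(correlation_id=1, client_id="demo")
print(len(frame))  # 18
```

The correlation ID is echoed back in the broker's response, which lets a client match responses to requests on a single connection.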

Main terminology

Events:

Events represent individual units of data or messages that are produced and consumed within Kafka. These can be any type of data, such as logs, user actions, system events, or sensor readings.

Events are organized into topics, which act as logical channels or categories for storing and processing related messages.
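The Apache Kafka documentation describes an event as having a key, a value, a timestamp, and optional metadata headers. The sketch below models that shape with a Python dataclass, and models a topic as a hypothetical in-memory, append-only list that gives each event the next offset. `Event` and `Topic` are illustrative names rather than client-library types, and real topics are split into partitions, which this sketch leaves out.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Event:
    """Illustrative event record: key, value, timestamp, optional headers."""
    key: Optional[str]
    value: str
    timestamp_ms: int
    headers: Dict[str, str] = field(default_factory=dict)


class Topic:
    """Hypothetical single-partition topic: an append-only list of events."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._log: List[Event] = []

    def append(self, event: Event) -> int:
        """Store the event and return its offset (its position in the log)."""
        self._log.append(event)
        return len(self._log) - 1

    def read(self, offset: int) -> Event:
        return self._log[offset]


payments = Topic("payments")
first = payments.append(Event("alice", "Made a payment of $200 to Bob", 1_700_000_000_000))
print(first, payments.read(first).key)  # 0 alice
```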

Producers:

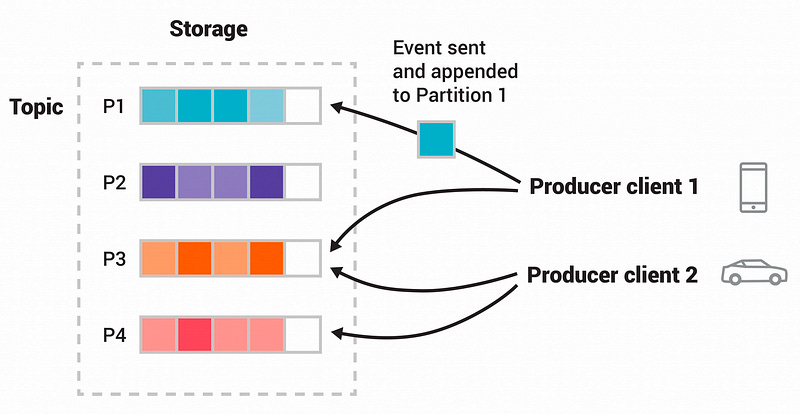

Producers are responsible for generating and publishing events to Kafka topics. They produce data streams by sending messages to Kafka brokers, and each message is appended to one partition of its topic.

Producers can be applications, services, or systems that generate data in real-time or batch mode and publish it to Kafka for consumption by downstream consumers.

Producers can also specify the partition to which they want to send a message. Otherwise, the producer's partitioner chooses one: messages with the same key are hashed to the same partition, while messages without a key are spread across partitions.
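Key-based partitioning comes down to hash(key) mod number-of-partitions, so every message with the same key lands in the same partition and keeps its relative order. Kafka's default partitioner uses a murmur2 hash of the serialized key; this sketch swaps in `zlib.crc32` only to stay dependency-free, so its partition numbers will not match a real cluster. `choose_partition` is a hypothetical helper, and rotating keyless messages round-robin is a simplification of what recent Kafka producers do.

```python
import itertools
import zlib
from typing import Iterator, Optional

# Counter used to spread keyless messages (simplified strategy).
_round_robin: Iterator[int] = itertools.count()


def choose_partition(key: Optional[bytes], num_partitions: int) -> int:
    """Pick a partition: hash the key if present, otherwise rotate."""
    if key is None:
        # Keyless messages: spread evenly across partitions.
        return next(_round_robin) % num_partitions
    # Keyed messages: same key -> same hash -> same partition.
    # crc32 is a stand-in; Kafka's default partitioner uses murmur2.
    return zlib.crc32(key) % num_partitions


for user in [b"alice", b"bob", b"alice"]:
    print(user, choose_partition(user, num_partitions=3))
```

Because the mapping depends on the partition count, adding partitions to an existing topic changes where new messages for a given key go.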

Consumers:

Consumers are applications or processes that subscribe to Kafka topics and consume events from them. They read messages from Kafka brokers and process them based on their business logic.

Consumers can be part of real-time data processing pipelines, analytics applications, monitoring systems, or any other component that requires access to streaming data.

Consumers can belong to consumer groups, which allow multiple consumers to work together to process data in parallel and achieve higher throughput and scalability. A consumer group subscribes to one or more topics, and Kafka assigns each partition of those topics to exactly one consumer in the group. Kafka guarantees that messages within a partition are delivered in the order they were written; there is no ordering guarantee across partitions.
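The following sketch illustrates the core rule behind consumer groups: within one group, each partition goes to exactly one consumer, so adding consumers (up to the partition count) spreads the work. It uses a simple round-robin assignment through a hypothetical `assign_round_robin` helper. Real Kafka runs a group rebalance protocol and offers several assignment strategies, so this shows the kind of outcome, not how Kafka computes it.

```python
from typing import Dict, List


def assign_round_robin(partitions: List[int], consumers: List[str]) -> Dict[str, List[int]]:
    """Give each partition to exactly one consumer in the group, in turn."""
    members = sorted(consumers)
    assignment: Dict[str, List[int]] = {c: [] for c in members}
    if not members:
        return assignment  # nobody in the group to assign partitions to
    for i, partition in enumerate(sorted(partitions)):
        assignment[members[i % len(members)]].append(partition)
    return assignment


# A topic with 4 partitions, read by a group of 2 consumers.
print(assign_round_robin([0, 1, 2, 3], ["consumer-a", "consumer-b"]))
# {'consumer-a': [0, 2], 'consumer-b': [1, 3]}

# With more consumers than partitions, the extra consumer sits idle.
print(assign_round_robin([0, 1], ["c1", "c2", "c3"]))
# {'c1': [0], 'c2': [1], 'c3': []}
```

The idle consumer in the second case is why the partition count caps the useful parallelism of a single group.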

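Kafka tracks consumption progress with offsets: for each partition it reads, a consumer group commits the offset of the next message to process. This provides fault tolerance, because if a consumer fails, another member of the group can take over its partitions and resume from the last committed offset.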
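Here is a minimal sketch of committed offsets, assuming an in-memory partition and a hypothetical `OffsetStore` keyed by (group, topic, partition). Following Kafka's convention, the committed value is the offset of the next message to read, so a replacement consumer resumes exactly where processing stopped. Real consumers commit to Kafka itself (the internal `__consumer_offsets` topic), either automatically or through explicit commit calls; `consume` here is an illustrative helper, not a client API.

```python
from typing import Dict, List, Tuple

Key = Tuple[str, str, int]  # (group, topic, partition)


class OffsetStore:
    """Hypothetical stand-in for Kafka's committed-offset storage."""

    def __init__(self) -> None:
        self._committed: Dict[Key, int] = {}

    def commit(self, key: Key, next_offset: int) -> None:
        self._committed[key] = next_offset

    def position(self, key: Key) -> int:
        # No commit yet: start from the beginning (like auto.offset.reset=earliest).
        return self._committed.get(key, 0)


def consume(partition_log: List[str], store: OffsetStore, key: Key, max_messages: int) -> List[str]:
    """Read up to max_messages from the committed position, then commit."""
    start = store.position(key)
    batch = partition_log[start:start + max_messages]
    # Business logic would process each message in the batch here.
    store.commit(key, start + len(batch))  # commit the NEXT offset to read
    return batch


log = ["m0", "m1", "m2", "m3", "m4"]
store = OffsetStore()
key = ("billing", "payments", 0)

print(consume(log, store, key, max_messages=2))   # ['m0', 'm1']
# Suppose the first consumer crashes here; a replacement in the same group resumes:
print(consume(log, store, key, max_messages=10))  # ['m2', 'm3', 'm4']
```

Committing only after processing gives at-least-once behavior: a crash between processing and committing means those messages are read again.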

How to create a Producer and Consumer?

I will cover the producer and consumer clients in the next parts of this series.

Next in this series: Apache Kafka Producer, Implementation (medium.com)